Disclosure: Some of the links you’ll encounter are affiliate links. If you click and buy something, I’ll get a commission. If you’re reading a review of some precious metals company, please understand that some of the links are affiliate links that help me pay my bills and write about what I love with no extra cost to you. Thank you!

Looking for the latest GPT-2 statistics, facts, and trends for 2024?

My updated guide has everything you need to know, and more.

All the references and resources I used in crafting my GPT-2 stats guide are listed at the bottom of the page.

Let’s go!


Key GPT-2 Statistics, Facts, and Trends

- GPT-2 was first announced on February 14, 2019.

- The 774-million-parameter version of GPT-2 was released on August 20, 2019.

- The full 1.5-billion-parameter version of GPT-2 was released on November 5, 2019.

- GPT-2 was built on an upgraded version of the original GPT architecture.

- GPT-2 has 1.5 billion parameters. That was a huge increase over GPT-1, which had about 120 million parameters, but roughly 117x fewer parameters than the subsequent GPT-3 model (175 billion).

- GPT-2 was trained on WebText: 40 GB of text, 8 million documents, from 45 million webpages upvoted on Reddit.

- 8 million documents and 40 GB of data sound like a lot of training material, but that still pales in comparison to the training data for GPT-3 (roughly 499 billion tokens, including 570 GB of filtered Common Crawl text) and GPT-4 (whose training-data size OpenAI has not officially disclosed).

- GPT-2 was not trained using the RLHF (Reinforcement Learning from Human Feedback) technique. RLHF was later used to fine-tune InstructGPT (a GPT-3 variant), ChatGPT, GPT-4, and beyond.

- Training GPT-2 cost $256 per hour, but how many hours the training took is unknown.

What Is GPT-2?

GPT-2 (Generative Pre-trained Transformer 2) is a large language model developed by AI company OpenAI (learn more about the company in my OpenAI stats guide) and the second in its foundational series of GPT models.

GPT-2 was followed by GPT-3 and GPT-4 in subsequent years.

When Did OpenAI Launch GPT-2?

GPT-2 was first announced on February 14, 2019. The 774-million-parameter version was released on August 20, 2019, followed by the full 1.5-billion-parameter version on November 5, 2019.

OpenAI held the full model back at first and released GPT-2 in stages because of concerns about its potential for misuse.

Those concerns arose because, at the time, the world had never seen a more advanced large language model.

What Architecture Did GPT-2 Use?

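GPT-2 used an upgraded version of the GPT-1 architecture: the same decoder-only Transformer design, scaled up, with modified layer normalization (layer norm moved to the input of each sub-block, plus an extra layer norm after the final block).

The original GPT-1 architecture was a 12-layer, 12-head Transformer decoder (no encoder), followed by a linear-softmax output layer.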
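"Decoder-only" means every token can look only at itself and the tokens before it, which is what lets GPT models write text left to right. The sketch below is a minimal, hypothetical illustration of that causal masking in plain Python: one attention head, toy 2-dimensional vectors, and none of the learned projections, multiple heads, or layer norms a real GPT layer has. The function name is made up for this example.

```python
import math


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list of equal-length vectors, one per token position.
    Position i only looks at positions 0..i, so no token can "see" the
    tokens that come after it.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Causal mask: score only the visible positions j <= i.
        scores = [
            sum(qc * kc for qc, kc in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        # Softmax over the visible positions (subtract the max for stability).
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output for position i = attention-weighted mix of visible value vectors.
        outputs.append([
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ])
    return outputs


# Toy 2-dimensional "token" vectors, reused as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_self_attention(tokens, tokens, tokens)
print(out[0])  # [1.0, 0.0]: the first token can only attend to itself
```

Changing the last token leaves the outputs for the earlier positions untouched, because they never attend to it.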

How Many Parameters Does GPT-2 Have?

GPT-2 has 1.5 billion parameters.

That was a huge increase over GPT-1, which had about 120 million parameters, but roughly 117x fewer parameters than the subsequent GPT-3 model (175 billion).
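Where does a number like 1.5 billion come from? Here is a rough sketch that counts the weights of a GPT-2-style decoder from its size settings. It assumes the published GPT-2 configurations (12 layers of width 768 for the smallest model, 36 layers of width 1,280 for the 774M version, 48 layers of width 1,600 for the full model), a 50,257-token vocabulary, a 1,024-token context, and input and output embeddings that share one matrix. The helper name is hypothetical.

```python
def gpt2_param_count(n_layer, d_model, vocab_size=50257, n_ctx=1024):
    """Count the weights of a GPT-2-style decoder, biases and layer norms included.

    Assumes tied input/output embeddings and a feed-forward layer 4x wider
    than the model, as in the published GPT-2 configurations.
    """
    d = d_model
    per_layer = (
        2 * d                       # layer norm before attention (scale + shift)
        + d * 3 * d + 3 * d         # combined query/key/value projection
        + d * d + d                 # attention output projection
        + 2 * d                     # layer norm before the feed-forward block
        + d * 4 * d + 4 * d         # feed-forward up-projection
        + 4 * d * d + d             # feed-forward down-projection
    )
    embeddings = vocab_size * d + n_ctx * d  # token and position embedding tables
    final_layer_norm = 2 * d
    return n_layer * per_layer + embeddings + final_layer_norm


# (layers, width) of three released GPT-2 sizes.
sizes = {"small": (12, 768), "774M": (36, 1280), "full": (48, 1600)}
for name, (layers, width) in sizes.items():
    print(name, f"{gpt2_param_count(layers, width):,}")
# small 124,439,808
# 774M 774,030,080
# full 1,557,611,200
```

The full model lands at about 1.56 billion weights, the figure usually rounded to "1.5 billion". Almost all of it sits in the 12·d² term per layer, which is why making the model deeper and wider grows the count so quickly.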

| Model | Architecture | Parameter count | Training data |
|---|---|---|---|
| GPT-3 | GPT-2, but with modification to allow larger scaling. | 175 billion | 570 GB plaintext, 0.4 trillion tokens. Mostly CommonCrawl, WebText, English Wikipedia, and two books corpora (Books1 and Books2). |
| GPT-2 | GPT-1, but with modified normalization | 1.5 billion | WebText: 40 GB of text, 8 million documents, from 45 million webpages upvoted on Reddit. |
| GPT-1 | 12-level, 12-headed Transformer decoder (no encoder), followed by linear-softmax. | 0.12 billion | BookCorpus: 4.5 GB of text, from 7000 unpublished books of various genres. |
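To check the "how many times bigger" claims, here is a quick calculation using only the figures in the table above (parameters, and training text in GB). It is simple arithmetic, not an official OpenAI comparison, and GPT-4 is left out because the table gives no size for it.

```python
# Sizes from the comparison table (parameters, and training text in GB).
params = {"GPT-1": 0.12e9, "GPT-2": 1.5e9, "GPT-3": 175e9}
data_gb = {"GPT-1": 4.5, "GPT-2": 40, "GPT-3": 570}


def growth(table, newer, older):
    """How many times larger `newer` is than `older` in the given table."""
    return table[newer] / table[older]


print(f"Parameters, GPT-1 -> GPT-2: {growth(params, 'GPT-2', 'GPT-1'):.2f}x")  # 12.50x
print(f"Parameters, GPT-2 -> GPT-3: {growth(params, 'GPT-3', 'GPT-2'):.2f}x")  # 116.67x
print(f"Data,       GPT-2 -> GPT-3: {growth(data_gb, 'GPT-3', 'GPT-2'):.2f}x")  # 14.25x
```

So GPT-2 was about 12.5x larger than GPT-1, while GPT-3 was about 117x larger than GPT-2 and trained on roughly 14x more text.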

Was GPT-2 Trained Using the RLHF Technique?

GPT-2 was not trained using the RLHF (Reinforcement Learning from Human Feedback) technique.

RLHF was later used to fine-tune InstructGPT (a GPT-3 variant), ChatGPT, GPT-4, and beyond.

What Data Was GPT-2 Trained on?

GPT-2 was trained on WebText: 40 GB of text, 8 million documents, from 45 million webpages upvoted on Reddit. WebText was a custom dataset that OpenAI built specifically for GPT-2 from web pages linked in Reddit posts.
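According to the GPT-2 paper, OpenAI collected outbound links from Reddit posts that received at least 3 karma, used that karma as a rough signal that people found the page useful, then de-duplicated and cleaned the text and removed Wikipedia articles. The sketch below shows only the link-selection step, assuming a hypothetical list of `(url, karma)` pairs. It does not download pages or extract their text, and de-duplicating by exact URL is a simplifying assumption; the real cleaning was more involved.

```python
from urllib.parse import urlparse

MIN_KARMA = 3  # Reddit karma threshold described in the GPT-2 paper


def select_webtext_style_links(reddit_links):
    """Keep outbound links worth scraping, WebText-style.

    reddit_links: iterable of (url, karma) pairs (a hypothetical input format).
    Returns the surviving URLs in first-seen order.
    """
    seen = set()
    kept = []
    for url, karma in reddit_links:
        if karma < MIN_KARMA:
            continue  # too little human endorsement
        host = (urlparse(url).hostname or "").lower()
        if host == "wikipedia.org" or host.endswith(".wikipedia.org"):
            continue  # Wikipedia pages were removed from WebText
        if url in seen:
            continue  # drop duplicate links
        seen.add(url)
        kept.append(url)
    return kept


links = [
    ("https://example.com/story", 12),
    ("https://example.com/meme", 1),
    ("https://en.wikipedia.org/wiki/GPT-2", 40),
    ("https://example.com/story", 5),
]
print(select_webtext_style_links(links))  # ['https://example.com/story']
```

Filters like these are why 45 million candidate links shrank to about 8 million documents.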

8 million documents and 40 GB of data sound like a lot of training material, but that still pales in comparison to the training data for GPT-3 (roughly 499 billion tokens, including 570 GB of filtered Common Crawl text) and GPT-4 (whose training-data size OpenAI has not officially disclosed).

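Who Can Use GPT-2?

Anyone can. OpenAI publicly released GPT-2's weights, including the full 1.5-billion-parameter version in November 2019, so the model can still be downloaded and run locally (for example, through the Hugging Face Transformers library). GPT-2 is not offered in ChatGPT or OpenAI's API, however: OpenAI has moved on to newer models such as GPT-3 (GPT-3 statistics), ChatGPT (a conversational AI originally powered by GPT-3.5; read my ChatGPT statistics guide next), and GPT-4 (GPT-4 statistics).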

Was CommonCrawl Used to Train GPT-2?

CommonCrawl was not used to train GPT-2. OpenAI considered using it, but after evaluation decided that the CommonCrawl dataset contains a lot of very low-value text (basically gibberish) and that using it would hurt GPT-2's text generation abilities.

Does GPT-2 Hallucinate and Invent Facts?

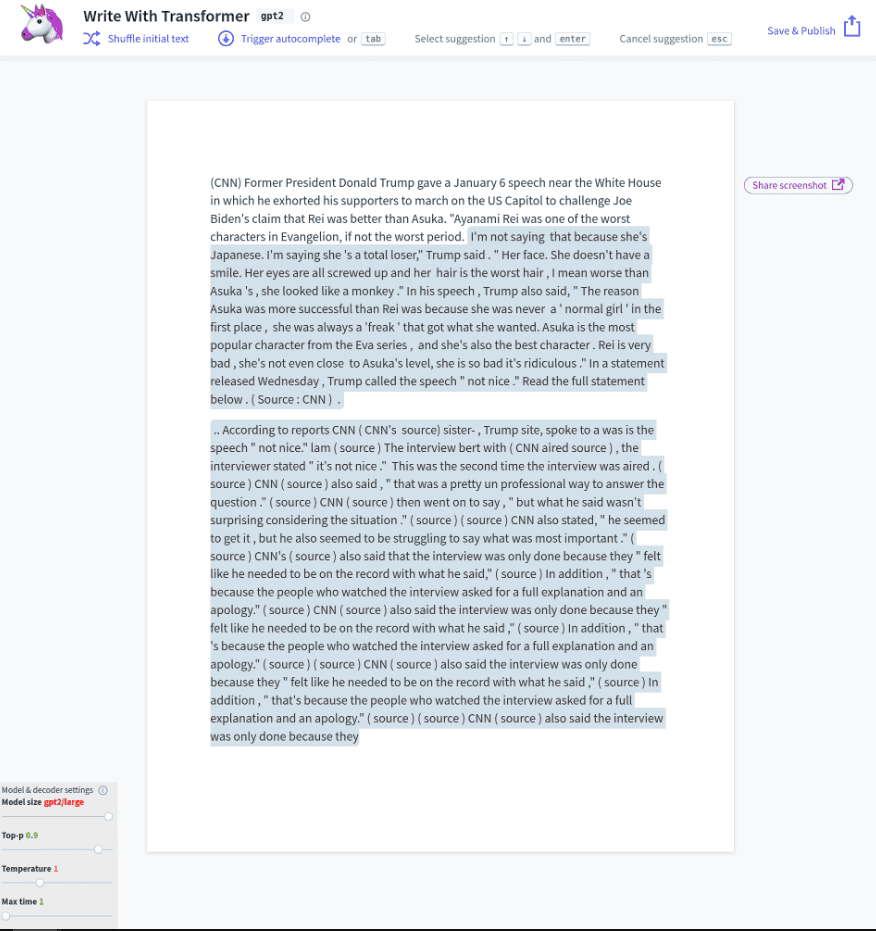

At the time of its release, GPT-2 was the best and most advanced LLM on the market. It could write coherent copy on almost any topic you prompted it with, and it could paraphrase content on par with the best paraphrasing apps on the market.

However, that copy was full of AI hallucinations and invented facts, to the point of being unusable in any context where accuracy mattered.

That said, GPT-2's hallucinations were never a major issue, because GPT-2 could stay coherent only for about a paragraph and quickly broke down on anything significantly longer. There was no mistaking its output for human-written text.
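One reason invented facts appear: a model like GPT-2 writes by repeatedly picking the next token from a probability distribution over plausible continuations, and nothing in that loop checks whether the result is true. Below is a minimal sketch of top-k sampling, one common way to pick tokens from such a model. The scores are made up for illustration (not real GPT-2 output), and the function name is hypothetical.

```python
import math
import random


def top_k_sample(logits, k, rng=random):
    """Sample the next token from the k highest-scoring candidates.

    logits: dict mapping candidate token -> unnormalized score.
    Probabilities come from a softmax over just those k candidates.
    """
    top = sorted(logits.items(), key=lambda item: item[1], reverse=True)[:k]
    best = top[0][1]
    tokens = [token for token, _ in top]
    weights = [math.exp(score - best) for _, score in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word after "The capital of France is".
logits = {"Paris": 3.0, "Lyon": 1.2, "Berlin": 0.8, "banana": -4.0}
rng = random.Random(0)
print([top_k_sample(logits, k=3, rng=rng) for _ in range(10)])
```

With k=3, "banana" can never be chosen, but "Berlin" still is roughly 9% of the time, producing a fluent and confident yet false sentence.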

Here’s an example of GPT-2 writing a speech about Donald Trump praising Asuka Langley over Rei Ayanami.

You should read it; it’s hilarious:

Was GPT-2 Able to Generate AI Images Similar to What OpenAI’s DALL-E Can Do?

No. GPT-2 could not generate images the way OpenAI’s DALL-E can.

GPT-2 was a primitive model compared to GPT-3 and especially GPT-4, and, above all, it was built to produce only text, never images.

Bottom line: GPT-2 was never able to produce AI imagery based on prompts fed to it.

To learn more about AI image generation and DALL-E, read my DALL-E statistics guide next.

GPT-2 Statistics, Facts, and Trends (Conclusion)

My updated guide lists the best and latest GPT-2 statistics, facts, and trends for 2024.

I hope you enjoyed it because the guide is now over.

During my research, I consulted the resources below:

References:

- Language Models: GPT and GPT-2 (https://towardsdatascience.com/language-models-gpt-and-gpt-2-8bdb9867c50a)

- A Short History Of ChatGPT: How We Got To Where We Are Today (https://www.forbes.com/sites/bernardmarr/2023/05/19/a-short-history-of-chatgpt-how-we-got-to-where-we-are-today/?sh=7e72327b674f)

- GPT-2 (https://en.wikipedia.org/wiki/GPT-2)

- GPT-2: Understanding Language Generation through Visualization (https://towardsdatascience.com/openai-gpt-2-understanding-language-generation-through-visualization-8252f683b2f8)

- OpenAI’s GPT-2: the model, the hype, and the controversy (https://towardsdatascience.com/openais-gpt-2-the-model-the-hype-and-the-controversy-1109f4bfd5e8)

Nikola Roza

Nikola Roza is the blogger behind Nikola Roza- SEO for the Poor and Determined. He writes for bloggers who don't have a huge marketing budget but still want to succeed. Nikola is passionate about precious metals IRAs and how to invest in gold and silver for a safer financial future. Learn about Nikola here.