Looking for the latest GPT-3 statistics, facts, and trends for 2023?

My updated guide has everything you need to know, and more.

All the references and resources I used in crafting my GPT-3 stats guide are listed at the bottom of the page.

Let’s go!

- GPT-3 was launched on June 11, 2020.

- GPT-3 has roughly 117x as many parameters as GPT-2 (175 billion vs. 1.5 billion).

- GPT-3 held the record for the largest training corpus: roughly 45TB of raw Common Crawl text before filtering, with a final dataset of 499 billion tokens. GPT-3 has since been surpassed by its successor, GPT-4, although OpenAI has not published the size of GPT-4’s training data.

- GPT-3 boasts an impressive 175 billion trainable parameters. That is still formidable: OpenAI has not disclosed GPT-4’s size, but if the prediction of ~200 billion trainable parameters holds, the difference between the two models is only ~25 billion parameters.

- Earlier NLP models like ELMo had 94 million parameters, BERT (Large) had 340 million, GPT-2 had 1.5 billion, and Turing NLG had 17 billion. GPT-3 blows them all out of the water.

- GPT-3’s training involved a massive dataset of 499 billion tokens, equivalent to 700GB in size.

- More than 300 apps were connected to the GPT-3 API while it was available. However, OpenAI has since closed GPT-3 to the public and is instead heavily promoting GPT-4.

- As of March 2021, GPT-3 generates an average of 4.5 billion words per day.

- Algolia tested GPT-3 on 2.1M news articles and got 91% precision.

GPT-3 Model Resources and Parameters Facts

- Over the past decade, the compute used to train the largest deep learning models has doubled roughly every 3.4 months. Between 2012 and 2018, that added up to a remarkable 300,000-fold increase in computational resources.

- GPT-3 used to hold the record for the largest training corpus: roughly 45TB of raw Common Crawl text before filtering, with a final dataset of 499 billion tokens. GPT-3 has since been surpassed by its successor, GPT-4, although OpenAI has not published the size of GPT-4’s training data.

- GPT-3 boasts an impressive 175 billion trainable parameters. That is still formidable given that GPT-4 is predicted to have ~200 billion trainable parameters (OpenAI has not disclosed the actual figure).

- Demonstrating its disruptive potential, GPT-3 is claimed to be able to automate approximately 70% of software development.

- Earlier NLP models like ELMo had 94 million parameters, BERT (Large) had 340 million, GPT-2 had 1.5 billion, and Turing NLG had 17 billion. GPT-3 blows them all out of the water.

- GPT-3 contains more than 100 times more parameters than its predecessor, GPT-2. GPT-2 had 1.5 billion parameters, while GPT-3 has 175 billion trainable parameters.

- Furthermore, GPT-3 has 10 times more parameters than Microsoft’s Turing NLG model (17 billion parameters).

- From the original GPT to GPT-3, the parameter count of the GPT-n models has grown by about three orders of magnitude.

- Measured by parameter count, GPT-3 is about 117 times larger than GPT-2.
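
The size comparisons above are simple ratios of parameter counts. Here is a minimal sketch that reproduces them from the figures quoted in this guide; the helper name `ratio_to` is just an illustration, not part of any library.

```python
# Parameter counts quoted in this guide, in billions.
PARAMS_B = {
    "GPT-2": 1.5,
    "Turing NLG": 17.0,
    "GPT-3": 175.0,
}


def ratio_to(model: str, baseline: str) -> float:
    """Return how many times more parameters `model` has than `baseline`."""
    return PARAMS_B[model] / PARAMS_B[baseline]


print(f"GPT-3 vs GPT-2:      {ratio_to('GPT-3', 'GPT-2'):.1f}x")       # 116.7x
print(f"GPT-3 vs Turing NLG: {ratio_to('GPT-3', 'Turing NLG'):.1f}x")  # 10.3x
```

So the "117x" figure is 175 / 1.5 rounded up, and the "10 times" figure is 175 / 17 rounded down.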

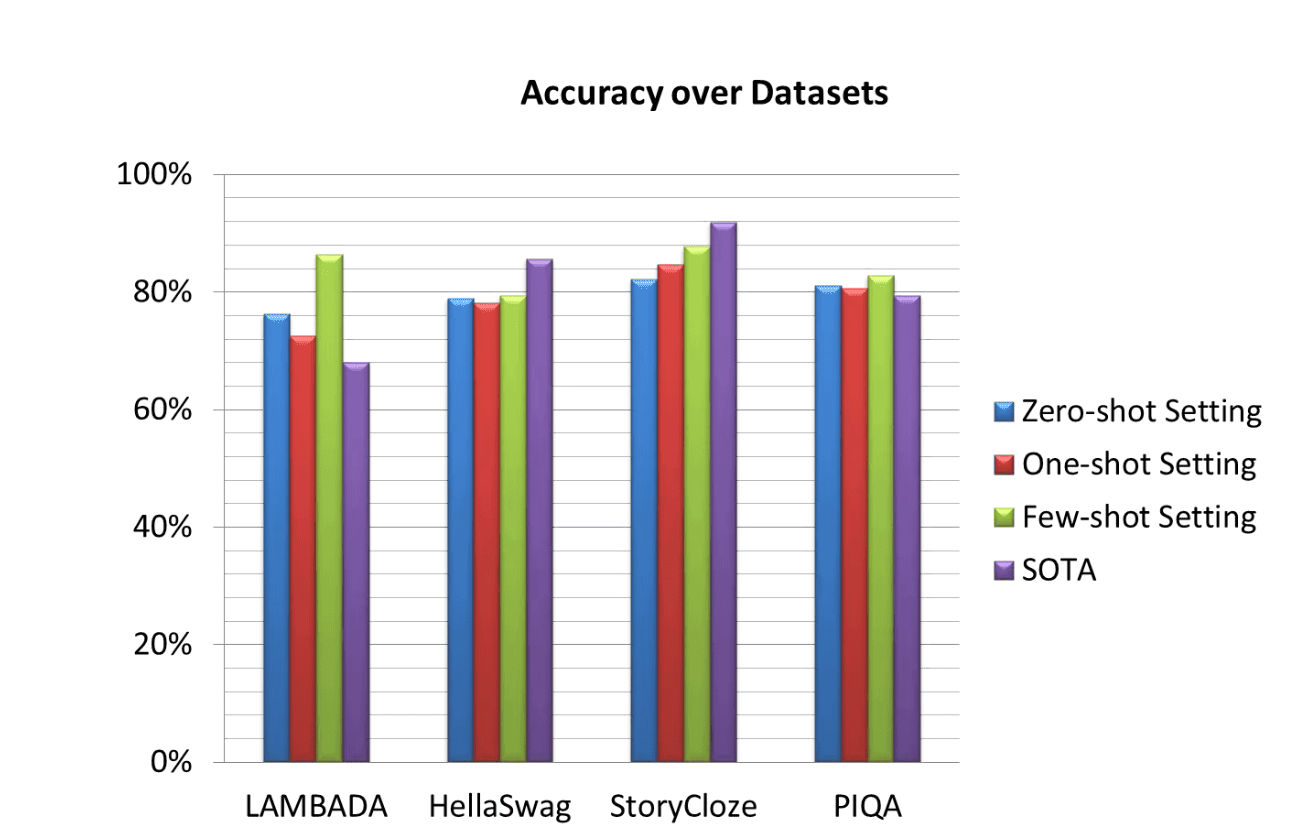

- In the zero-shot setting, GPT-3 demonstrated an 8% accuracy improvement over the state of the art (SOTA) on the LAMBADA dataset.

- Compared to SOTA’s 60% accuracy for two-digit addition and subtraction, GPT-3 in the few-shot setting achieved 100% accuracy on two-digit addition and 98.9% on two-digit subtraction.

- In answering complex natural language questions, Algolia’s GPT-3-based search answered accurately four times as often as BERT.

- In October 2021, Microsoft and NVIDIA announced a large language model called Megatron-Turing NLG, featuring 530 billion parameters.
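
The compute-growth stat at the top of this section can be sanity-checked with a little arithmetic. This is a rough sketch that assumes perfectly steady exponential growth, which real-world compute trends only approximate; `months_to_grow` is a made-up helper name.

```python
import math

DOUBLING_MONTHS = 3.4  # doubling time quoted above
GROWTH = 300_000       # overall increase quoted for 2012-2018


def months_to_grow(factor: float, doubling_months: float) -> float:
    """Months needed to grow by `factor` if the quantity doubles every `doubling_months`."""
    return math.log2(factor) * doubling_months


months = months_to_grow(GROWTH, DOUBLING_MONTHS)
print(f"{months:.0f} months, or about {months / 12:.1f} years")
```

A 300,000-fold increase is about 18 doublings, so at 3.4 months per doubling it takes a little over five years. That is consistent with the 2012 to 2018 window.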

GPT-3 Model Architecture Facts

The GPT-3 transformer-based model boasts a massive architecture which is divided into submodels.

- GPT-3 comes in 8 model sizes, with parameter counts ranging from 125 million to 175 billion.

- The number of transformer layers ranges from 12 to 96.

- The learning rate decreases from 6.0 × 10^-4 for the smallest model to 0.6 × 10^-4 for the largest.

| Model | Trainable Parameters | Transformer Layers | Bottleneck Layer Units | Attention Heads | Attention Head Dimension | Batch Size | Learning Rate |
|---|---|---|---|---|---|---|---|
| GPT-3 Small | 125M | 12 | 768 | 12 | 64 | 0.5M | 6.0 × 10^-4 |
| GPT-3 Medium | 350M | 24 | 1024 | 16 | 64 | 0.5M | 3.0 × 10^-4 |
| GPT-3 Large | 760M | 24 | 1536 | 16 | 96 | 0.5M | 2.5 × 10^-4 |
| GPT-3 XL | 1.3B | 24 | 2048 | 24 | 128 | 1M | 2.0 × 10^-4 |
| GPT-3 2.7B | 2.7B | 32 | 2560 | 32 | 80 | 1M | 1.6 × 10^-4 |
| GPT-3 6.7B | 6.7B | 32 | 4096 | 32 | 128 | 2M | 1.2 × 10^-4 |
| GPT-3 13B | 13.0B | 40 | 5140 | 40 | 128 | 2M | 1.0 × 10^-4 |
| GPT-3 175B | 175.0B | 96 | 12288 | 96 | 128 | 3.2M | 0.6 × 10^-4 |
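
You can roughly recover the parameter counts in the table from just two columns. The sketch below uses a common rule of thumb for transformer models, `12 * n_layers * d_model**2`, which is my addition and not something from the sources of this guide. It assumes the "Bottleneck Layer Units" column is the model width (`d_model`) and it ignores embeddings and biases, so it undershoots for the smallest models.

```python
def approx_params(n_layers: int, d_model: int) -> int:
    """Rule-of-thumb count of non-embedding transformer parameters.

    Each layer has about 4 * d_model^2 attention weights and
    8 * d_model^2 feed-forward weights, hence the factor of 12.
    """
    return 12 * n_layers * d_model**2


# (layers, width) pairs taken from the table above.
MODELS = {
    "GPT-3 Small": (12, 768),
    "GPT-3 13B": (40, 5140),
    "GPT-3 175B": (96, 12288),
}

for name, (n_layers, d_model) in MODELS.items():
    print(f"{name}: ~{approx_params(n_layers, d_model) / 1e9:.1f}B parameters")
```

For the largest model this gives about 174 billion, which is close to the 175 billion headline number. For GPT-3 Small it gives about 85 million, and the gap to 125 million is mostly the embedding layers the rule of thumb leaves out.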

GPT-3 Model Training Statistics

Here’s the data GPT-3 was trained on.

- GPT-3’s training involved a massive dataset of 499 billion tokens, equivalent to 700GB in size.

- Common Crawl contributed the largest portion at 60%. Common Crawl’s dataset consists of diverse information (data gathered from all niches) collected via web crawling over the span of several years.

- The second biggest contributor is WebText2, accounting for 22% of training data. Note: WebText2 encompasses data sourced from outbound Reddit links. This is why Reddit has moved to charge for API access: it doesn’t want AI companies training their chatbots on its content for free.

- Additionally, Books1 and Books2 together represent 16% of the dataset, encompassing corpora from books freely available on the web. You can bet GPT-3 has read all the classics you can and can’t think of :)

- Lastly, Wikipedia makes up 3% of the training data, comprising information extracted from English Wikipedia pages. Currently, there’s a big debate inside Wikipedia on whether AI is going to destroy Wikipedia or power it up to unimaginable heights.
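
To get a feel for what this mix means in practice, here is a small sketch that draws training documents at random according to the percentages above. It assumes the percentages act as sampling weights, and it is only an illustration, not OpenAI's actual data pipeline. The percentages add up to 101 because of rounding, which is fine because `random.choices` normalizes the weights.

```python
import random
from collections import Counter

# Share of the training mix per source, as listed above (percent).
MIX = {
    "Common Crawl": 60,
    "WebText2": 22,
    "Books1 + Books2": 16,
    "Wikipedia": 3,
}


def sample_sources(n: int, seed: int = 0) -> Counter:
    """Draw `n` source names at random, weighted by their share of the mix."""
    rng = random.Random(seed)
    names = list(MIX)
    weights = list(MIX.values())
    return Counter(rng.choices(names, weights=weights, k=n))


counts = sample_sources(100_000)
for name, count in counts.most_common():
    print(f"{name}: {count / 1000:.1f}%")
```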

GPT-3 Performance and Accuracy Statistics

The performance and accuracy of GPT-3 have been extensively evaluated across various existing datasets, and the noteworthy performance statistics are shown in the table below.

| Dataset | Zero-shot Setting | One-shot Setting | Few-shot Setting | SOTA |
|---|---|---|---|---|
| HellaSwag | 78.9% | 78.1% | 79.3% | 85.6% |
| LAMBADA | 76.2% | 72.5% | 86.4% | 68% |
| PhysicalQA (PIQA) | 81.0% | 80.5% | 82.8% | 79.4% |
| StoryCloze | 82.2% | 84.7% | 87.7% | 91.8% |
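
The table is easier to read once you compare each few-shot score against the SOTA column. This short sketch does that with the numbers from the table; the function name `beats_sota` is just for illustration.

```python
# (few-shot accuracy, SOTA accuracy) in percent, copied from the table above.
RESULTS = {
    "HellaSwag": (79.3, 85.6),
    "LAMBADA": (86.4, 68.0),
    "PhysicalQA (PIQA)": (82.8, 79.4),
    "StoryCloze": (87.7, 91.8),
}


def beats_sota(results: dict) -> list:
    """Return the datasets where the few-shot score is higher than SOTA."""
    return [name for name, (few_shot, sota) in results.items() if few_shot > sota]


for name, (few_shot, sota) in RESULTS.items():
    print(f"{name}: {few_shot - sota:+.1f} points vs SOTA")

print("Few-shot GPT-3 beats SOTA on:", beats_sota(RESULTS))
```

GPT-3 comes out ahead on LAMBADA and PIQA and stays behind the fine-tuned state of the art on HellaSwag and StoryCloze.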

GPT-3 Business Model Statistics

- Training GPT-3 demands an enormous amount of compute: approximately 3.114×10^23 FLOPs (floating-point operations). On a Tesla V100 cloud instance at a rate of $1.5 per hour, that works out to about 355 GPU-years and $4.6 million.

- Because GPT-3 cannot be trained on a single GPU and requires a distributed system, this results in a 1.5x to 5x increase in the cost of training the final model.

- The research and development (R&D) cost of GPT-3 is estimated to range from $11.5 million to $27.6 million, excluding expenses related to parallel GPUs, salaries, and submodel costs.

- GPT-3 has to be split across a minimum of 11 Tesla V100 GPUs, each with 32GB of memory, which comes to $99,000 for the GPU cluster alone, without accounting for RAM, CPU, SSD drives, and power supply costs.

- The GPT-3 model itself is estimated to cost around $12.6 million. Loading it to run inference takes at least 350GB of VRAM for the weights alone (half precision, at 16 bits per parameter), which pushes the practical VRAM requirement past 400GB.

- For hardware costs of running GPT-3, the estimated range is $100,000 to $150,000, excluding expenses for power supply, cooling, and backup costs.

- For solid performance, a baseline Nvidia DGX-1 server with 8×16GB of VRAM costs approximately $130,000, including all other necessary components.

- When deployed in the cloud, GPT-3 requires Amazon’s p3dn.24xlarge instance, equipped with 8x Tesla V100 GPUs (32GB), 768GB RAM, and 96 CPU cores, at an hourly cost of $10 to $30, amounting to a minimum annual expense of $87,000.

- GPT-3’s supercomputer, hosted in Microsoft’s Azure cloud, is comprised of 285,000 CPU cores and 10,000 high-end GPUs.
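
Most of the numbers in this section follow from simple arithmetic, so they are easy to double-check. Here is a minimal sketch using only the figures quoted above; the function names are my own, and the implied per-GPU speed is derived from those figures rather than taken from a spec sheet.

```python
import math

HOURS_PER_YEAR = 24 * 365


def training_cost_usd(gpu_years: float, usd_per_gpu_hour: float) -> float:
    """Cloud bill for a given number of GPU-years at an hourly rate."""
    return gpu_years * HOURS_PER_YEAR * usd_per_gpu_hour


def implied_tflops(total_flops: float, gpu_years: float) -> float:
    """Sustained per-GPU throughput implied by total FLOPs and GPU-years."""
    seconds = gpu_years * HOURS_PER_YEAR * 3600
    return total_flops / seconds / 1e12


def fp16_weights_gb(n_params: float) -> float:
    """Memory for the weights alone at 2 bytes (16 bits) per parameter."""
    return n_params * 2 / 1e9


def min_gpus(weights_gb: float, gpu_mem_gb: float) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    return math.ceil(weights_gb / gpu_mem_gb)


print(training_cost_usd(355, 1.5))               # 4664700.0, the ~$4.6M figure
print(round(implied_tflops(3.114e23, 355), 1))   # sustained TFLOPS per V100
print(fp16_weights_gb(175e9))                    # 350.0 GB
print(min_gpus(fp16_weights_gb(175e9), 32))      # 11 GPUs
print(10 * HOURS_PER_YEAR)                       # 87600, the ~$87,000 per year
```

So the $4.6 million, 350GB, 11-GPU, and $87,000 figures are all consistent with each other and with the inputs the sources quote.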

GPT-3 Pricing

GPT-3 pricing is unavailable. OpenAI no longer sells access to the GPT-3 technology because their main sellers are now ChatGPT (which runs on GPT-3.5) and GPT-4.

ChatGPT Plus costs $20/mo and GPT-4 pricing is:

GPT-4 models with 8k context length (e.g. gpt-4 and gpt-4-0314):

- $0.03/1k prompt tokens

- $0.06/1k sampled tokens

GPT-4 models with 32k context length (e.g. gpt-4-32k and gpt-4-32k-0314):

- $0.06/1k prompt tokens

- $0.12/1k sampled tokens
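
To see what these rates mean for a single request, here is a small calculator sketch. The prices are the ones listed above; the helper `request_cost_usd` is hypothetical and is not part of the OpenAI API or SDK.

```python
# USD per 1k tokens: (prompt price, sampled price), from the list above.
PRICES_PER_1K = {
    "gpt-4": (0.03, 0.06),
    "gpt-4-32k": (0.06, 0.12),
}


def request_cost_usd(model: str, prompt_tokens: int, sampled_tokens: int) -> float:
    """Estimate the cost of one request from its token counts."""
    prompt_price, sampled_price = PRICES_PER_1K[model]
    return (prompt_tokens * prompt_price + sampled_tokens * sampled_price) / 1000


# Example: a 1,500-token prompt that gets a 500-token answer.
print(f"gpt-4:     ${request_cost_usd('gpt-4', 1500, 500):.3f}")
print(f"gpt-4-32k: ${request_cost_usd('gpt-4-32k', 1500, 500):.3f}")
```

The same request costs about 7.5 cents on the 8k model and twice that on the 32k model, since both the prompt and sampled rates double.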

Again, the GPT-3 model is retired and you can’t get access to it. The only way to use GPT-3 is through an AI writing tool that still runs on it, but at this point 90% of AI writing tools on the market run on GPT-4.

GPT-3 Statistics Facts and Trends 2023 FAQ

#1- Does GPT-3 Cost Money?

OpenAI used to charge for access to the GPT-3 API.

However, OpenAI no longer sells access to GPT-3 and you can’t buy access to GPT-3 anymore.

Learn about OpenAI by reading my OpenAI statistics post next.

#2- What Does GPT-3 Stand for?

GPT stands for Generative Pre-trained Transformer, and the 3 means it’s the third version of the flagship OpenAI product.

#3- Can I Download GPT-3?

You can’t download GPT-3, for two reasons.

First, GPT-3 is no longer available publicly. It’s an older large language model that has been supplanted by ChatGPT (ChatGPT is powered by GPT-3.5; read my ChatGPT stats guide next) and GPT-4.

Second, you were never able to download GPT-3. GPT-3 was never downloadable software. Instead, it was accessible via the cloud, and you could use it by logging into your OpenAI account.

#4- Can I Talk to GPT-3?

You can talk to GPT-3 by giving it textual prompts and instructions. GPT-3 will respond to you in a human-like way.

You can’t talk audibly to GPT-3 and hope for it to understand you. You can do that now with Microsoft Bing’s Bing Chat, which is powered by OpenAI’s GPT-4.

#5- How Do GPT-3 and GPT-4 Compare?

GPT-3 is an earlier model and GPT-4 is far more advanced. That said, while GPT-3 has 175 billion parameters, GPT-4 is predicted to have ~200 billion.

So it’s not that big of a difference, but the GPT-4 training was more efficient. Learn about GPT-4 by reading my GPT-4 statistics guide next!

| Simulated exam | GPT-3.5 (score, est. percentile) | GPT-4 (score, est. percentile) | GPT-4 (no vision) (score, est. percentile) |
|---|---|---|---|
| Graduate Record Examination (GRE) Writing | 4 / 6 (~54th) | 4 / 6 (~54th) | 4 / 6 (~54th) |
| Graduate Record Examination (GRE) Quantitative | 147 / 170 (~25th) | 163 / 170 (~80th) | 157 / 170 (~62nd) |
| Graduate Record Examination (GRE) Verbal | 154 / 170 (~63rd) | 169 / 170 (~99th) | 165 / 170 (~96th) |
| LSAT | 149 (~40th) | 163 (~88th) | 161 (~83rd) |
| SAT Evidence-Based Reading & Writing | 670 / 800 (~87th) | 710 / 800 (~93rd) | 710 / 800 (~93rd) |
| SAT Math | 590 / 800 (~70th) | 700 / 800 (~89th) | 690 / 800 (~89th) |
| Uniform Bar Exam (MBE+MEE+MPT) | 213 / 400 (~10th) | 298 / 400 (~90th) | 298 / 400 (~90th) |

#6- Is GPT-3 Free for Personal Use?

GPT-3 used to be free for personal use. You could just log in to OpenAI and start using GPT-3.

However, access to GPT-3 is no longer available, even for personal use.

#7- Can I Use GPT-3 for My Business?

You could’ve used GPT-3 for your business while it was publicly available. However, GPT-3 is no longer available and you can’t use it for your business.

GPT-3 Statistics Facts and Trends 2023 (Conclusion)

My updated guide lists the best and latest GPT-3 statistics facts and trends for 2023.

I hope you enjoyed it because the guide is now over.

During my research, I consulted these resources below:

References:

- (https://www.reddit.com/r/privacy/comments/12r1tjk/reddit_to_start_charging_for_api_access_so_ai/)

- AI is killing the old web, and the new web struggles to be born (https://www.theverge.com/2023/6/26/23773914/ai-large-language-models-data-scraping-generation-remaking-web)

- ‘Not for Machines to Harvest’: Data Revolts Break Out Against A.I. (https://www.nytimes.com/2023/07/15/technology/artificial-intelligence-models-chat-data.html)

- Common Crawl (https://en.wikipedia.org/wiki/Common_Crawl)

- AI Is Tearing Wikipedia Apart (https://www.vice.com/en/article/v7bdba/ai-is-tearing-wikipedia-apart)

- How much does GPT-4 cost? (https://help.openai.com/en/articles/7127956-how-much-does-gpt-4-cost)

- GPT-3 Statistics 2023: Usage, Parameters, Use Cases & More (https://businessolution.org/gpt-3-statistics/)

- OpenAI GPT-3: Everything You Need to Know (https://www.springboard.com/blog/data-science/machine-learning-gpt-3-open-ai)

- A Complete Overview of GPT-3 — The Largest Neural Network Ever Created (https://towardsdatascience.com/gpt-3-a-complete-overview-190232eb25fd)

Nikola Roza

Nikola Roza is the blogger behind Nikola Roza - SEO for the Poor and Determined. He writes for bloggers who don't have a huge marketing budget but still want to succeed. Nikola is passionate about precious metals IRAs and how to invest in gold and silver for a safer financial future.